Our Achievements

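We have established a national-level postdoctoral research workstation. It currently has 68 research staff, including 12 senior engineers, 2 holders of doctoral degrees, and 12 holders of master's degrees.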

Innovation Achievements

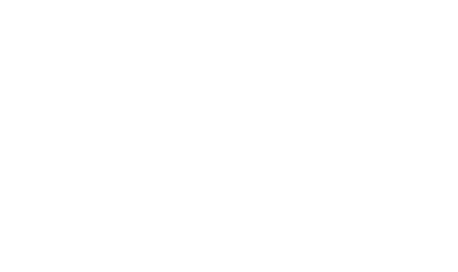

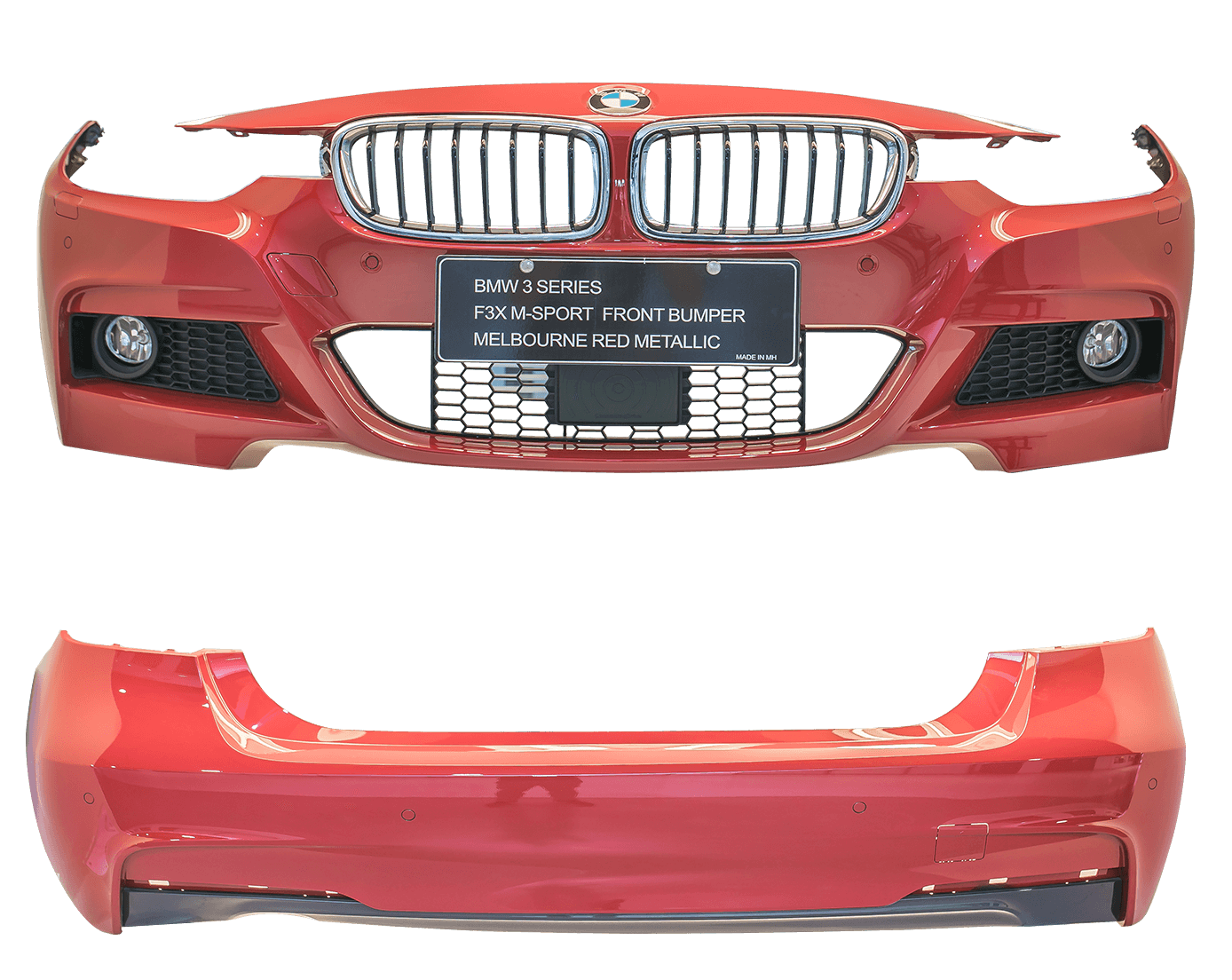

--Hollow blow-molded spoiler process technology and products

--Standardized design technology for automotive molds

Other Innovation Achievements

--Buick (GM) colored bumper

National Key New Product

--Shenlong (Dongfeng Peugeot Citroën) Elysée colored bumper fascia

National Torch Program

--Jinbei Granse instrument panel

National Torch Program

--Buick Excelle exterior trim assembly

National Torch Program

R&D Center

About Us

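The company is located on the shores of Lake Tai in Wuxi, Jiangsu Province, a land known for its beautiful waters. It was founded in June 1988 and listed on the Shenzhen Stock Exchange on February 28, 1997, under stock code "000700".

[Automotive Segment]: The company mainly develops, produces, and sells automotive bumpers and other auto parts, plastic products, molds, and high-tech molded products. Its annual automotive bumper production capacity exceeds 6 million sets, making it a leading supplier of automotive exterior trim systems and services in China.

[Hospital Segment]: Mingci Hospital, built with investment from the company, is a modern Class III (tertiary) cardiovascular specialty hospital with an international service orientation and a high standard of diagnosis and treatment.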